
# Pure Storage upgrade

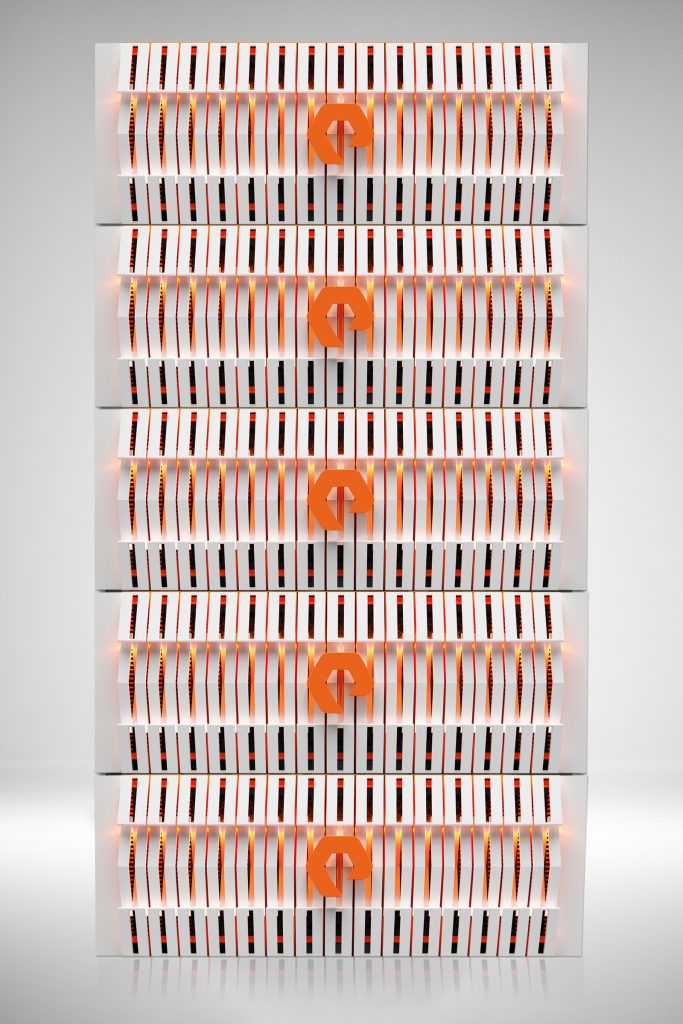

The timing of the read latency changes lines up EXACTLY with the upgrade time - in the case of the array in the below image (taken from 30-day performance data in Pure1), it was upgraded one week ago on 4/24 at 6 PM and the effect has been continuous since that date/time. We also see a reduction in overall reported capacity, but this was expected because our arrays meet the criteria defined in 6.1.14 fixlist item "Corrects capacity reporting on //C, //X and //XL arrays with large wide write groups (PURE-243865) " None of our workloads have changed I/O profiles in this same time period, and no new workloads were added to these arrays.

And while this may be an almost an imperceptible marginal effect from the perspective of external workloads, I suspect that internal Purity processes like additional pass dedupe and compression are suffering as a result on arrays with already medium-high to high load values on our busiest array this is causing issues with not being able to churn all the way through System space fully to redistribute into the Shared or Unique space buckets. In addition, the RANGE of read latency values is flatter and much less variable than before the upgrade. However, I'm seeing anywhere from a 15% increase in read latency on our lowest-utilization arrays to a nearly 70% increase on our heaviest-hit array. I hesitate to even use the word degraded because that implies that it is now bad or unacceptable, and all of our arrays are still sub-millisecond for read latency, which is certainly still fast. I am seeing evidence across multiple arrays in our fleet that were upgraded from 6.1.13 to 6.1.15 recently indicating array-level read latency performance is degraded after the upgrade.
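One way to put numbers on a before/after shift like this (the percent increase in read latency, and whether the values got flatter) is to split an exported latency series at the upgrade time and compare the two sides. This is a minimal sketch, assuming the samples have already been exported (for example from Pure1) as (timestamp, latency in ms) pairs. The function name `compare_around_upgrade` and the sample values are hypothetical: they are not real array data and not a Pure1 API.

```python
from statistics import mean, pstdev


def compare_around_upgrade(samples, upgrade_time):
    """Compare read-latency samples before and after an upgrade time.

    Hypothetical helper. `samples` is an iterable of (timestamp, latency_ms)
    pairs; timestamps can be any comparable type (datetime, epoch seconds, ...).
    """
    samples = list(samples)
    before = [lat for ts, lat in samples if ts < upgrade_time]
    after = [lat for ts, lat in samples if ts >= upgrade_time]
    if not before or not after:
        raise ValueError("need samples on both sides of the upgrade time")

    mean_before = mean(before)
    mean_after = mean(after)
    return {
        "mean_before_ms": mean_before,
        "mean_after_ms": mean_after,
        # Percent change in mean latency: 15.0 means a 15% increase.
        "pct_change": (mean_after - mean_before) / mean_before * 100.0,
        # Spread on each side: a smaller value means flatter, less variable latency.
        "stdev_before_ms": pstdev(before),
        "stdev_after_ms": pstdev(after),
        "range_before_ms": max(before) - min(before),
        "range_after_ms": max(after) - min(after),
    }


if __name__ == "__main__":
    # Hypothetical (hour, latency_ms) samples; the upgrade happens at hour 3.
    demo = [(0, 0.40), (1, 0.50), (2, 0.30), (3, 0.60), (4, 0.62), (5, 0.58)]
    result = compare_around_upgrade(demo, upgrade_time=3)
    print(f"mean read latency change: {result['pct_change']:.1f}%")
    print(f"range before: {result['range_before_ms']:.2f} ms, "
          f"after: {result['range_after_ms']:.2f} ms")
```

Using population standard deviation alongside min-max range is a design choice: the range is easy to read off a chart, while the standard deviation is less sensitive to a single outlier sample.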

